Author: Konstantinos Bessas, CTO AWS at CloudNation

Even though I couldn’t make it to AWS re:Invent 2024 in person this year, I’ve been following the updates closely. With the event halfway through, there’s already plenty to talk about: new services, enhancements to existing tools, and some interesting themes emerging from the keynotes. In this post, I’ll share some of the highlights that have intrigued me so far and what they might mean for those of us working with AWS every day.

As in previous posts, I will split the information into the high-level categories that AWS uses. Without further ado, let’s dive in!

Application Integration

AWS has announced new features that allow customers to share resources like Amazon EC2 instances, Amazon ECS and EKS container services, and HTTPS services across Amazon VPC and AWS account boundaries. This enables the building of event-driven applications via Amazon EventBridge and orchestration of workflows with AWS Step Functions. These features leverage Amazon VPC Lattice and AWS PrivateLink, providing more options for network design and integration across technology stacks.

The key benefits for AWS customers include:

- Simplified Modernization: Accelerate modernization efforts by integrating cloud-native apps with on-premises legacy systems.

- Enhanced Integration: Seamlessly connect public and private HTTPS-based applications into event-driven architectures and workflows.

- Efficiency: Replace complex data transfer methods with simpler, more efficient solutions.

These updates aim to help customers drive growth, adapt to the cloud, and reduce costs while meeting security and compliance requirements. Read more about this announcement here.

Containers

Businesses should focus on delivering business value rather than on operational tasks. Those tasks can be offloaded to a managed services partner, or minimized by pursuing a no-ops model with key cloud services like the one described below.

AWS announced the general availability of Amazon Elastic Kubernetes Service (Amazon EKS) Auto Mode. This new capability streamlines Kubernetes cluster management for compute, storage, and networking, from provisioning to ongoing maintenance with a single click.

Key Benefits for AWS Customers:

- Higher Agility and Performance: By eliminating the operational overhead of managing cluster infrastructure, businesses can achieve greater agility and performance.

- Cost-Efficiency: EKS Auto Mode continuously optimizes costs, helping businesses manage their budgets more effectively.

- Simplified Management: Automates cluster management without requiring deep Kubernetes expertise. It selects optimal compute instances, dynamically scales resources, manages core add-ons, patches operating systems, and integrates with AWS security services.

- Enhanced Operational Responsibility: AWS takes on more operational responsibility, configuring, managing, and securing the AWS infrastructure in EKS clusters, allowing customers to focus on building applications that drive innovation.

- Support for AI Workloads: Reduces the work required to acquire and run cost-efficient GPU-accelerated instances, ensuring generative AI workloads have the necessary capacity.

Amazon EKS Auto Mode is now available in all commercial AWS Regions where Amazon EKS is available, except the China Regions. It supports Kubernetes 1.29 and above, with no upfront fees or commitments. Customers pay for the management of the compute resources provisioned, in addition to regular EC2 costs. You can read more about this announcement here.

Databases

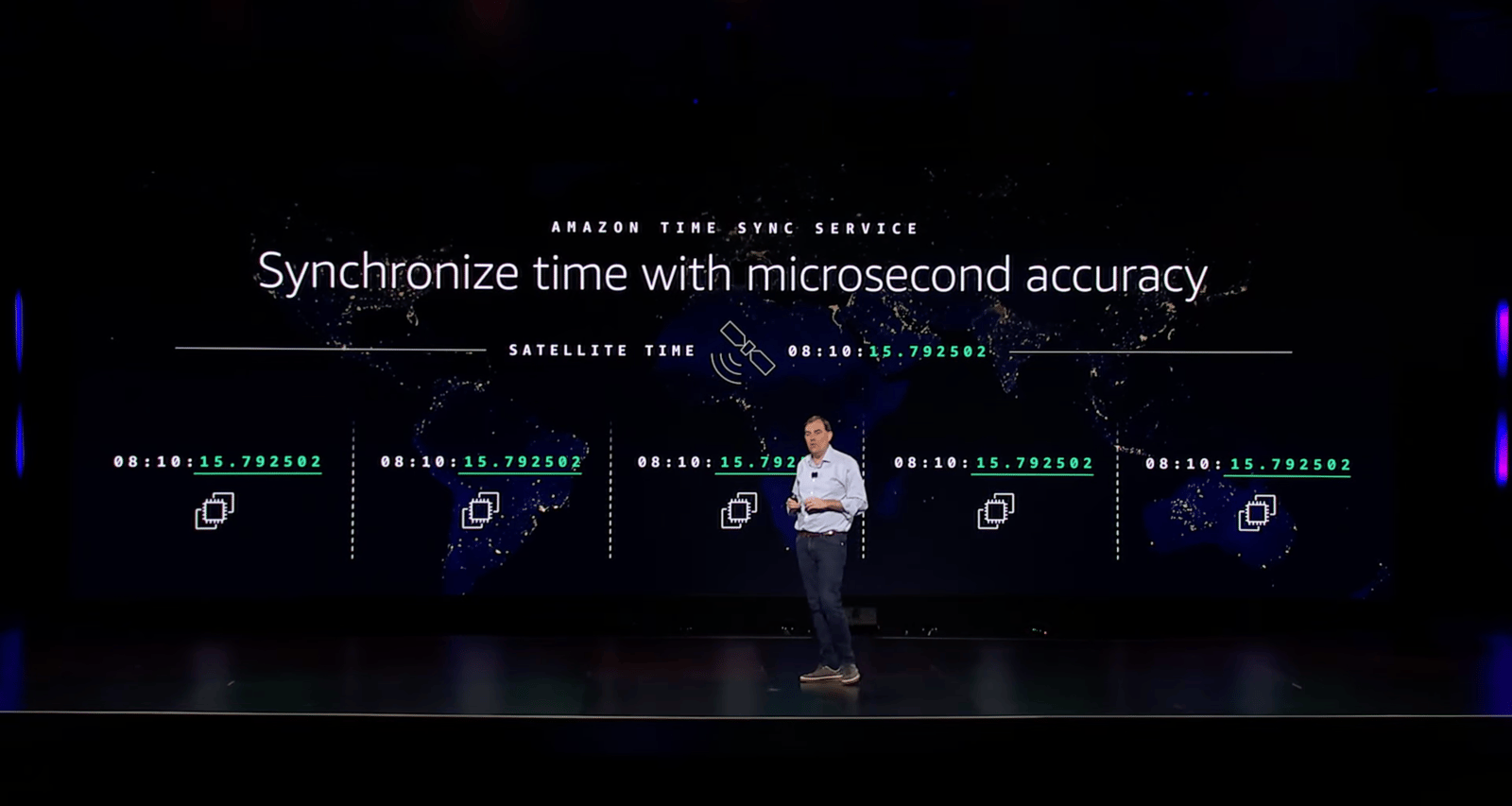

In his keynote on Tuesday, Matt Garman discussed the challenges of distributed systems, particularly the difficulty of keeping distributed databases in sync over long distances. Providing highly available applications while maintaining low-latency reads and writes across AWS Regions is a common challenge faced by many customers.

Accessing data from different Regions can cause delays of hundreds of milliseconds compared to microseconds within the same Region. Developers often have to build complex custom solutions for data replication and conflict resolution, which increases operational workload and the risk of errors. Beyond multi-Region replication, these customers have to implement manual database failover procedures and ensure data consistency and recovery to deliver highly available applications and data durability.

Amazon Web Services (AWS) announced the general availability of Amazon MemoryDB Multi-Region, a fully managed, active-active, multi-Region database that you can use to build applications with up to 99.999 percent availability, microsecond read, and single-digit millisecond write latencies across multiple AWS Regions. MemoryDB Multi-Region is available for Valkey, a Redis Open Source Software (OSS) drop-in replacement stewarded by the Linux Foundation. This new feature builds upon the existing benefits of Amazon MemoryDB, such as multi-AZ durability and high throughput across multiple AWS Regions, and addresses these common challenges faced by many customers.

Benefits of MemoryDB Multi-Region:

- High availability and disaster recovery: Build applications with up to 99.999 percent availability. If an application cannot connect to MemoryDB in a local Region, it can connect to MemoryDB from another AWS Regional endpoint with full read and write access. MemoryDB Multi-Region will automatically synchronize data across all AWS Regions when the application reconnects to the original endpoint.

- Low latency: Serve both reads and writes locally from the Regions closest to your customers with microsecond read and single-digit millisecond write latency at any scale. Data is typically propagated asynchronously between AWS Regions in less than one second.

- Compliance and regulatory adherence: Meet compliance and regulatory requirements by choosing the specific AWS Region where your data resides.

You can read more about this announcement here.

AWS also announced Amazon Aurora DSQL, a new serverless distributed SQL database designed for always-available applications. Aurora DSQL offers virtually unlimited scale, highest availability, and zero infrastructure management. It can handle any workload demand without the need for database sharding or instance upgrades.

Key benefits for AWS customers include:

- High Availability: Aurora DSQL is designed for 99.99% availability in single-Region configurations and 99.999% in multi-Region configurations.

- Scalability: It scales automatically to meet workload demands, eliminating the need for manual scaling.

- Ease of Management: The serverless design removes the operational burden of patching, upgrades, and maintenance.

- PostgreSQL Compatibility: Developers can use familiar PostgreSQL tools and frameworks, making it easy to build and deploy applications.

Aurora DSQL's innovative active-active architecture ensures continuous availability and strong data consistency, making it ideal for building resilient, high-performance applications. You can read more about this announcement here.
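To put the availability figures quoted above into perspective, here is a quick back-of-the-envelope sketch. It is my own illustration, not something from the AWS announcements: the function name is made up, and it simply assumes a 365-day year and treats the availability target as a fraction of total time.

```python
# Convert an availability target into an allowed-downtime budget.
# Assumption: a 365-day year (leap years ignored).
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Return the maximum downtime per year implied by an availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for target in (99.99, 99.999):
        minutes = downtime_minutes_per_year(target)
        print(f"{target}% availability allows about {minutes:.1f} minutes of downtime per year")
```

Under these assumptions, 99.99% works out to roughly 52.6 minutes of downtime per year, while 99.999% leaves only about 5.3 minutes. That gap is why the multi-Region configurations matter for applications that truly need to be always available.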

I'm looking forward to hearing more about the distributed database challenges in Dr. Werner Vogels’ keynote on Thursday.

Developer Tools

Wow, more than 30 minutes of Matt Garman’s keynote was devoted to Amazon Q Developer and the latest additions to its capabilities. Let’s look at the ones that I think really stand out.

Amazon Q Developer agent capabilities include generating documentation, code reviews, and unit tests

AWS expanded Amazon Q Developer with three new features:

- Enhanced documentation: Automatically generate comprehensive documentation within your IDE.

- Code reviews: Detect and resolve code quality and security issues.

- Unit tests: Automate the creation of unit tests to improve test coverage.

The new features of Amazon Q Developer enhance productivity by automatically generating comprehensive documentation for both newly written code and existing codebases, saving developers time and keeping information up to date. Automated code reviews improve code quality and security by detecting issues early, while automated unit test generation increases test coverage and reliability. These capabilities streamline the development process, allowing developers to focus more on creating new features and less on repetitive tasks. You can read more about this announcement here.

Investigate and remediate operational issues with Amazon Q Developer

AWS has announced a new capability in Amazon Q Developer, now in preview, designed to help customers investigate and remediate operational issues using generative AI. This new feature automates root cause analysis and guides users through operational diagnostics, leveraging AWS's extensive operational experience. It integrates seamlessly with Amazon CloudWatch and AWS Systems Manager, providing a unified troubleshooting experience. This capability aims to simplify complex processes, enabling faster issue resolution and allowing customers to focus on innovation. Currently, it is available in the US East (N. Virginia) Region.

This and similar products in the generative AI sphere are transforming, and will continue to transform, managed service providers (MSPs) and platform engineering teams by automating routine tasks, enhancing scalability, and improving efficiency while reducing operational costs.

You can read more about this announcement here.

Generative AI / Machine Learning

Next generation of Amazon SageMaker

AWS has announced the next generation of Amazon SageMaker, a comprehensive platform for data, analytics, and AI. This new version integrates various components needed for data exploration, preparation, big data processing, SQL analytics, machine learning (ML) model development, and generative AI application development. The existing Amazon SageMaker has been renamed to Amazon SageMaker AI, which is now part of the new platform but also available as a standalone service.

Key Highlights:

- SageMaker Unified Studio (preview): A single environment for data and AI development, integrating tools from Amazon Athena, Amazon EMR, AWS Glue, Amazon Redshift, and more.

- Amazon Bedrock IDE (preview): Updated tools for building and customizing generative AI applications.

- Amazon Q: AI assistance integrated throughout the SageMaker workflows.

Value for AWS Customers:

- Unified Environment: Simplifies the development process by bringing together data and AI tools in one place.

- Enhanced Capabilities: Offers advanced tools for data processing, model development, and generative AI, improving efficiency and scalability.

- Flexibility: Allows customers to use SageMaker AI as part of the unified platform or as a standalone service, catering to different needs.

This update aims to streamline workflows and enhance the capabilities available to AWS customers, making it easier to build, train, and deploy AI and ML models at scale. Read more about the release here.

Amazon Nova

Andy Jassy, welcome back to re:Invent! He delivered a presentation (more of a mini-keynote) within Matt Garman's keynote. And it came with announcements!

Amazon has announced Amazon Nova, a new generation of foundation models available exclusively in Amazon Bedrock. These models offer state-of-the-art intelligence and industry-leading price performance, designed to lower costs and latency for various generative AI tasks. Amazon Nova can handle tasks such as document and video analysis, chart and diagram understanding, video content generation, and building sophisticated AI agents. There are two main categories of models: understanding models and creative content generation models. The understanding models include Amazon Nova Micro, Lite, Pro, and the upcoming Premier, which process text, image, or video inputs to generate text output. The creative content generation models include Amazon Nova Canvas for image generation and Amazon Nova Reel for video generation.

Amazon Nova models can be fine-tuned with text, image, and video inputs to meet specific industry needs, making them highly adaptable for various enterprise applications. Built-in safety controls and content moderation capabilities ensure responsible AI use, with features like digital watermarking. For AWS customers, Amazon Nova offers cost efficiency, as the models are optimized for speed and cost, making them a cost-effective solution for AI tasks. These models set new standards in multimodal intelligence and agentic workflows, achieving state-of-the-art performance on key benchmarks. Supporting over 200 languages, Amazon Nova enables the development of global applications without language barriers. Integration with Amazon Bedrock simplifies deployment and scaling, with features like real-time streaming, batch processing, and detailed monitoring. Read more about the release here.

Storage

Amazon has introduced Amazon S3 Tables, a new feature designed to optimize storage for tabular data such as daily purchase transactions, streaming sensor data, and ad impressions. These tables use the Apache Iceberg format, enabling easy queries with popular engines like Amazon Athena, Amazon EMR, and Apache Spark. Key highlights include:

- Performance: Up to 3x faster query performance and up to 10x more transactions per second compared to self-managed table storage.

- Efficiency: Fully managed service that handles operational tasks, providing significant operational efficiency.

Amazon S3 Tables are stored in a new type of S3 bucket called table buckets. These buckets act like an analytics warehouse, capable of storing Iceberg tables with various schemas. They offer the same durability, availability, scalability, and performance characteristics as standard S3 buckets, while also automatically optimizing storage to maximize query performance and minimize costs.

Each table bucket is Region-specific and has a unique name within the AWS account. These buckets use namespaces to logically group tables, simplifying access management. The tables themselves are fully managed, with automatic maintenance tasks such as compaction, snapshot management, and removal of unreferenced files, so that users can focus on their data rather than on maintenance.
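The bucket, namespace, and table hierarchy described above can be pictured with a small conceptual model. This is purely an illustration of the grouping idea and not the S3 Tables API: the `TableBucket` class, its methods, and the example names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TableBucket:
    """Hypothetical stand-in for a table bucket: tied to one Region, holds namespaces."""
    name: str
    region: str
    # Maps a namespace name to the set of table names grouped under it.
    namespaces: dict[str, set[str]] = field(default_factory=dict)

    def add_table(self, namespace: str, table: str) -> None:
        """Register a table under a namespace, creating the namespace if needed."""
        self.namespaces.setdefault(namespace, set()).add(table)

    def tables_in(self, namespace: str) -> list[str]:
        """Return the tables in a namespace, sorted by name."""
        return sorted(self.namespaces.get(namespace, set()))


# Example names below are invented for illustration only.
bucket = TableBucket(name="analytics-demo", region="eu-west-1")
bucket.add_table("sales", "daily_purchases")
bucket.add_table("sales", "refunds")
bucket.add_table("iot", "sensor_readings")
print(bucket.tables_in("sales"))
```

Grouping tables by namespace like this is what makes access management simpler: permissions can be reasoned about per logical group instead of per individual table.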

Integration with AWS services is a key feature of Amazon S3 Tables. Currently in preview, the integration with AWS Glue Data Catalog allows users to query and visualize data using AWS Analytics services like Amazon Athena, Amazon Redshift, Amazon EMR, and Amazon QuickSight. The table buckets support relevant S3 API functions and ensure security by automatically encrypting all stored objects and enforcing Block Public Access. Read more about this announcement here.

AWS is building on the release above to provide further value to its customers by introducing queryable object metadata for Amazon S3 buckets.

The feature automatically generates rich metadata for objects when they are added or modified. This metadata is stored in fully managed Apache Iceberg tables, allowing customers to use tools like Amazon Athena, Amazon Redshift, Amazon QuickSight, and Apache Spark to efficiently query and find objects at any scale.

This new feature simplifies the process of managing and querying metadata, making it easier for AWS customers to locate the data they need for analytics, data processing, and AI training workloads. Additionally, Amazon Bedrock will annotate AI-generated video inference responses with metadata, helping users identify the content and the model used.

Overall, this enhancement aims to reduce the complexity and improve the scalability of systems that capture, store, and query metadata, providing significant value to AWS customers. Read more about this announcement here.

To be continued...

That has been quite a lot already. As we take a moment to absorb everything that's happened so far at AWS re:Invent 2024, it's clear that this year's event is shaping up to be even more exciting than 2023. The CEO's keynote has introduced some impressive new technologies and strategies. With so much already revealed, we're looking forward to what the second half of the event will bring. Stay tuned for more updates and insights.