Let me try to explain. Having worked many years as a network engineer/designer/consultant, I was used to designing and building a network from scratch: unpacking boxes, connecting cables, upgrading firmware and most of all creating the right configuration to make the network behave exactly the way you want it to. VLANs, trunks, BPDU guard, Quality of Service and routing topics like OSPF areas, or more recent technologies like VXLAN, anycast or SD-WAN.

We had to think of and create it all ourselves. A new requirement for an existing network environment might require some whitepaper reading, whiteboard drawings and experimenting, but more often than not the clever network engineer would be able to make it happen.

Even with more recent networking products like ACI and NSX, where the creation of configurations has been virtualized and can be automated, you still have to do the intelligent stuff yourself: translating the networking requirements of the IT environment into a stable, flexible, secure and most of all well-performing network. With the introduction and further evolution of public cloud this concept has changed quite a bit.

Ready-made

I’m using AWS as a reference now because that’s the cloud I know best, but the point I’m trying to make is just as relevant for Azure, and probably GCP, OCI and any other large provider. The great thing is that these providers have many excellent networking services for you to pick from, enabling you to deploy and start using them within seconds or minutes.

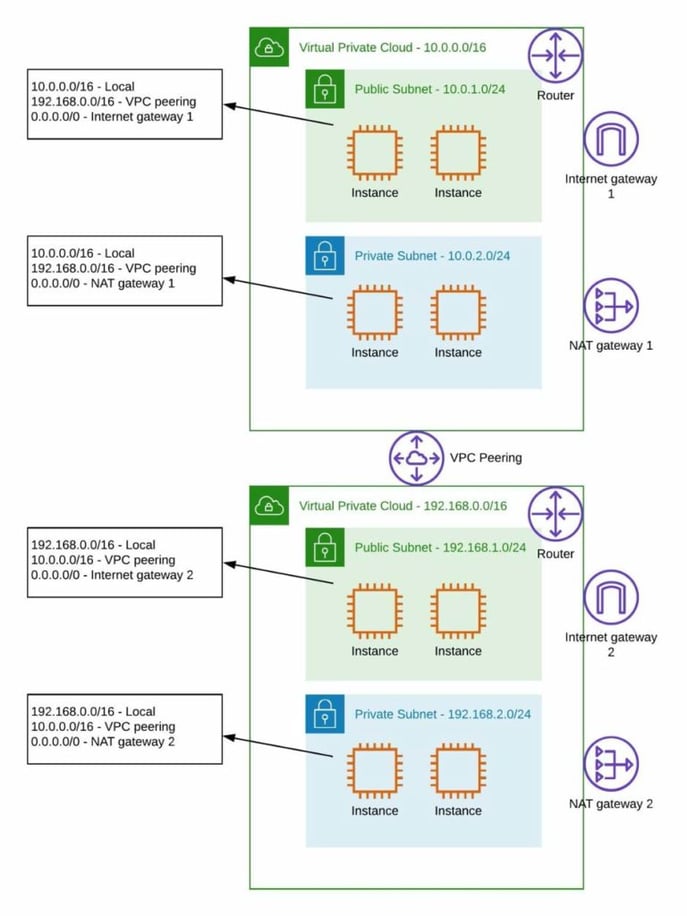

So no more extensive discussions and drawing sessions, multiple design versions and testing plans. In AWS you have the Virtual Private Cloud (VPC) to deploy your services in and to separate them logically from other customers, and that’s it. You can choose the geographical region you want to deploy it in, spread it over multiple Availability Zones (each one or more datacenters) if you require redundancy, and set a few small configuration options, but you don't get the flexible (complex?) set of dozens of datacenter VLANs with an accompanying hierarchy you were used to.
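To make the "small configuration options" concrete, here is a minimal sketch of the one bit of address planning that remains: carving a VPC range into one subnet per Availability Zone. The CIDR `10.0.0.0/16`, the zone names and the helper `plan_subnets` are assumptions for illustration only; the sketch uses Python's standard `ipaddress` module and does not call AWS.

```python
import ipaddress


def plan_subnets(vpc_cidr, zones, new_prefix=24):
    """Assign one subnet of size /new_prefix to each Availability Zone."""
    network = ipaddress.ip_network(vpc_cidr)
    # subnets() yields consecutive, non-overlapping blocks inside the VPC range
    blocks = network.subnets(new_prefix=new_prefix)
    return {zone: next(blocks) for zone in zones}


# Hypothetical VPC range and zone names
plan = plan_subnets("10.0.0.0/16", ["eu-west-1a", "eu-west-1b", "eu-west-1c"])
for zone, subnet in plan.items():
    print(zone, subnet)
```

Compared with a datacenter VLAN plan this is about all the layer 2/3 design there is: one range, split over the zones you need.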

Routing example

The router has always been a central component, attached to layer 2 domains to interconnect them. Within the VPC this concept has been split up into simple route tables. Each of these can be attached to a subnet or gateway, so there is no longer a device you can SSH into to configure or troubleshoot.
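A route table is really just data: a list of destinations and targets, where the most specific matching route wins. The following is a rough sketch of that lookup, not AWS code. The routes and the target names `igw-example` and `vgw-example` are made up for illustration.

```python
import ipaddress

# Hypothetical route table: (destination CIDR, target)
ROUTES = [
    ("10.0.0.0/16", "local"),           # traffic inside the VPC
    ("192.168.0.0/16", "vgw-example"),  # on-premises range (assumed)
    ("0.0.0.0/0", "igw-example"),       # everything else
]


def lookup(routes, destination_ip):
    """Return the target of the most specific route matching destination_ip."""
    address = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no matching route, so the packet is dropped
    # Longest prefix match: the route with the largest prefix length wins
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(lookup(ROUTES, "10.0.1.5"))
print(lookup(ROUTES, "8.8.8.8"))
```

There is no routing protocol and no CLI involved here, which is exactly the difference with the router you used to log in to.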

How about loadbalancing?

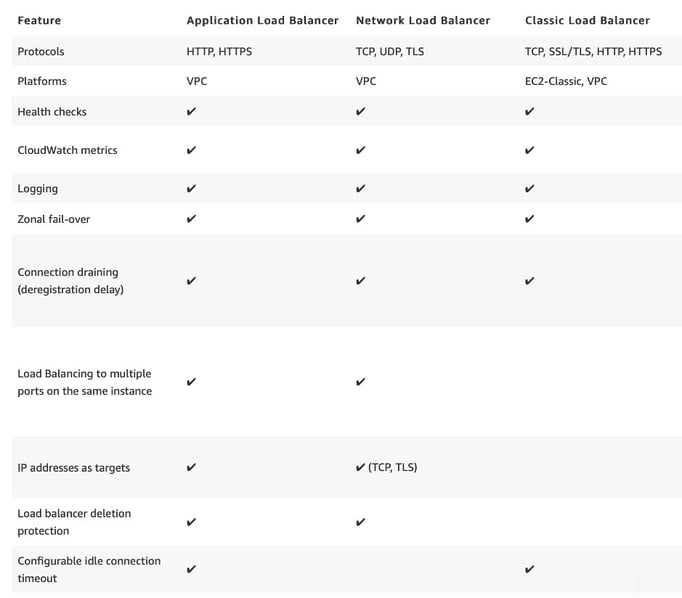

Let’s take loadbalancers as the next example. Within a datacenter network you can deploy one as a dedicated physical or virtual machine, but first you have to buy it. The great thing is that you have many brands and features at your disposal to configure it exactly as your network and/or application requires.

Choosing the right one, however, is often a frustrating exercise: you have to compare several vendors with their unique functions and different pricing and operating models, and pick the one you are sure will fulfil the organization’s needs for at least five years. Never an easy task.

AWS makes it much simpler: just choose one of the Elastic Load Balancers (Classic Load Balancer, Network Load Balancer or Application Load Balancer). Each has its own features, use cases and best practices, with some differences in functionality.

The great thing is that you just have to choose and deploy it, making at most a dozen or so configuration choices. From that point on AWS takes care of the availability, scaling and patching of the service.
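As a rough illustration of how small the decision is, the sketch below picks a loadbalancer type from the listener protocol alone. It is a simplification and an assumption on my part: the function `suggest_load_balancer` is hypothetical, it only covers the Application and Network Load Balancer, and a real choice also weighs features such as static IP addresses or path-based routing.

```python
# Simplified subset of listener protocols per loadbalancer type
ALB_PROTOCOLS = {"HTTP", "HTTPS"}       # layer 7
NLB_PROTOCOLS = {"TCP", "UDP", "TLS"}   # layer 4


def suggest_load_balancer(protocol):
    """Suggest a loadbalancer type for a listener protocol (illustrative only)."""
    protocol = protocol.upper()
    if protocol in ALB_PROTOCOLS:
        return "application"
    if protocol in NLB_PROTOCOLS:
        return "network"
    raise ValueError(f"no suggestion for protocol {protocol!r}")


print(suggest_load_balancer("https"))
print(suggest_load_balancer("udp"))
```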

There is always the possibility to deploy a loadbalancer solution from the Marketplace or even install the software yourself on an EC2 instance (virtual machine), but I would strongly advise against this unless there is a very good use case or application/business requirement for doing so. Besides the additional costs, you once again become responsible for the sizing, monitoring/availability, performance and patching of this instance, something you don't have to worry about with any of the AWS managed Elastic Load Balancers.

WAN differences

Now let's take WAN connectivity as another example. In the datacenter networking world you have different technologies to choose from, connecting your office to the datacenter using a dark fiber or leased line, at any bandwidth you require. You can sign a contract with any vendor you want, as long as they are physically able to bring a cable into your office and datacenter.

Over this cable you can define a layer 2 network, a layer 3 network with routing like BGP, OSPF or IS-IS, or even an overlay protocol. There is complete freedom; you just have to make a clear agreement with the WAN vendor about what type of packets you want to send and how these should be handled. Just like in the loadbalancer example there are lots of options to choose from, which should lead to a solution that is the best fit for your requirements. But it also requires a lot of thinking and designing, with the risk of making a wrong decision or estimate somewhere in the process.

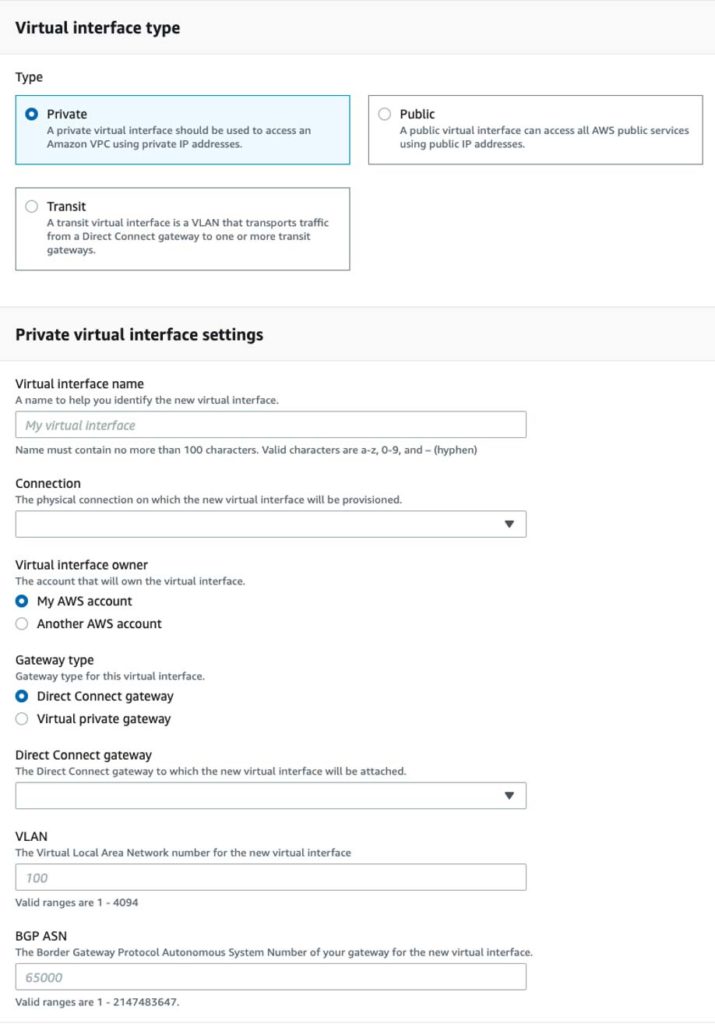

How easy this has become with Public Cloud: AWS offers Direct Connect, and that's it. It is only delivered by selected WAN suppliers and with very specific properties. Of course there are a few choices to be made. What are the bandwidth and failover requirements? Following from that, do I want a dedicated or a hosted connection? Do I require Private and/or Public Virtual Interfaces as logical connections? But it's always layer 3, always BGP. The same goes for ExpressRoute on Azure.

Only a handful of options to configure for creating a Direct Connect Virtual Interface
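To show how short that list of options is, here is a minimal sketch of a Private Virtual Interface as a plain data structure. The class and its field names are hypothetical and chosen for illustration; they are not the AWS API. The only validation is the 802.1Q VLAN range, a positive BGP ASN and the address family.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivateVirtualInterface:
    """Illustrative model of the choices for a private virtual interface."""
    name: str
    vlan: int                  # 802.1Q tag on the Direct Connect connection
    customer_asn: int          # BGP ASN on your side of the session
    gateway_id: str            # gateway the interface attaches to (assumed ID)
    address_family: str = "ipv4"

    def __post_init__(self):
        if not 1 <= self.vlan <= 4094:
            raise ValueError("VLAN ID must be between 1 and 4094")
        if self.customer_asn <= 0:
            raise ValueError("BGP ASN must be a positive number")
        if self.address_family not in ("ipv4", "ipv6"):
            raise ValueError("address family must be 'ipv4' or 'ipv6'")


# Example values are made up
vif = PrivateVirtualInterface(
    name="office-to-vpc", vlan=101, customer_asn=65000, gateway_id="vgw-example"
)
print(vif)
```

Everything else, including the fact that routing is BGP over layer 3, is fixed by the service.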

How about the money?

Last example: costs. In the datacenter we own the switches, routers and firewalls and likely pay a monthly fee for our internet and WAN connections, so costs are stable. Once again, not so in Public Cloud. You buy and therefore own nothing. Some services are free, like the Virtual Private Cloud (VPC) itself, Subnets, the Internet Gateway and gateway-type VPC Endpoints. Other networking services like NAT Gateway, interface-type VPC Endpoints and Elastic Load Balancers are charged per running hour and for the data they process, and since February 2024 public IPv4 addresses are charged per hour as well.

Also, outbound data to the internet, to on-premises and between Availability Zones and Regions is charged per gigabyte. Applications are no longer deployed in just one or two of your own datacenters; they can just as easily be deployed in Europe, Asia or the US when there's a good business rationale to do so. Rates differ between regions.
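The "per running hour plus per gigabyte" model is easy to put into a back-of-the-envelope calculation. The sketch below is only that: the function `monthly_cost` is hypothetical and the rates are placeholders, not actual AWS prices, so look up the current rates for your region before relying on any number.

```python
HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months


def monthly_cost(hourly_rate, per_gb_rate, gb_processed, hours=HOURS_PER_MONTH):
    """Estimate the monthly cost of a service billed per hour and per GB."""
    return hourly_rate * hours + per_gb_rate * gb_processed


# Placeholder rates for a single always-on gateway processing 1000 GB
estimate = monthly_cost(hourly_rate=0.05, per_gb_rate=0.05, gb_processed=1000)
print(f"{estimate:.2f}")
```

Note that the hourly part is paid whether or not any traffic flows, which is why idle resources matter.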

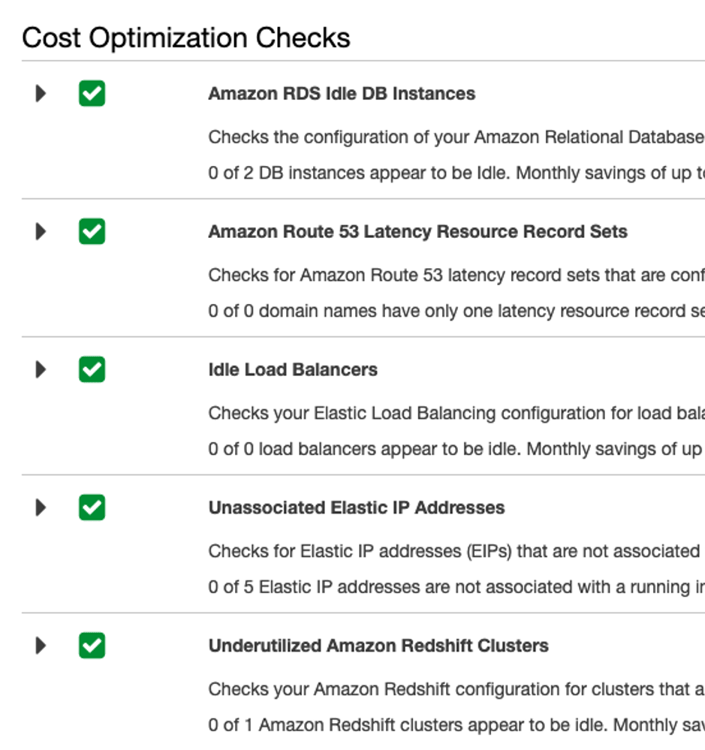

Suddenly, as a network architect/engineer you're faced with a pay-per-use model and have to think carefully about the design and build choices you make, instead of using the available physical datacenter/WAN bandwidth in any way you see fit. In your datacenter you won't be charged for an unused fiber cable that is still connected, or for VLANs or public IP addresses that aren’t in use. In a Public Cloud environment many unused resources keep appearing on the monthly bill and are potential cost savings.

In a properly governed cloud environment these costs will also be reported on regularly, and the responsible departments held accountable. So, to conclude: keeping a regular eye on networking costs, acting on them and rethinking solutions to make them cheaper suddenly becomes a priority task.

Change of mentality

The point I'm trying to make in this blog is that when you're a 'traditional' networking engineer/architect, there is a paradigm shift once you start learning about and working with Public Cloud networking. Yes, it's still just moving IPv4 or IPv6 packets between systems, but the way we have to work with the services delivering this transport is quite different.

Many functionalities and services are at your disposal as building blocks; you just have to deploy and connect them to establish connectivity. Of course there's some important designing and thinking to be done, especially in large environments spanning multiple regions, but not at the level of detail and flexibility you are accustomed to.

Every AWS networking service has its limitations. Where in the datacenter you could fix almost every challenge with some command line creativity and perhaps an occasional extra device, within the public cloud this simply won't work: the options you are used to aren't there. On the other hand, new services and functions are announced and released on a weekly basis, so keep a close eye on these improvements, as they may make your life easier or a solution simpler or more robust.

If you do happen to run into a limitation whose removal would help you, and potentially other cloud customers as well, just contact the technical or commercial liaison at your Public Cloud vendor. If there's sufficient demand they will very likely put it on the roadmap. From the other perspective, nowadays you see cloud/devops engineers deploying Public Cloud networking services who have never created a VLAN, configured a switchport or designed a firewall cluster.

For them it's very useful to gain some in-depth networking knowledge of how packet forwarding works 'under the hood', or their creation may be suboptimal from, for example, a performance, cost or security perspective. Sharing knowledge is the key to getting the most out of all these great new concepts that are put at our disposal.